Now that our service is exposed to the internet (see the previous post), it's time to automate things.

In short, we must:

- Plan with agile methods such as Kanban or Scrum, tracking all requirements and steps in Jira, Confluence, GitHub Projects, etc.

- Code, usually in an organization repository, using Git as the version control system

- Build with a build tool (e.g. SBT, Maven, Yarn) in a Continuous Integration pipeline connected to Git

- Run unit tests, coverage, A/B tests, etc.

- Release to npm, Artifactory, GitHub Releases, etc.

- Continuously deploy to a Platform as a Service (e.g. Heroku, AWS Elastic Beanstalk, Vercel), to an application server (Gunicorn, Tomcat), etc.

- Operate the IT resources: create VMs, manage access, run canary deployments, practice chaos engineering, etc.

- Monitor: write post-mortems, analyze costs, track performance metrics, etc.

- Plan again.

Do you apply these steps in your organization? Then you're already applying Site Reliability Engineering (SRE) practices!

As you may already know, DevOps emerged to close gaps and break down silos between development and operations teams by automating software delivery and infrastructure changes. DevOps is a philosophy, not a development methodology or a technology; SRE is a practical way to implement that philosophy.

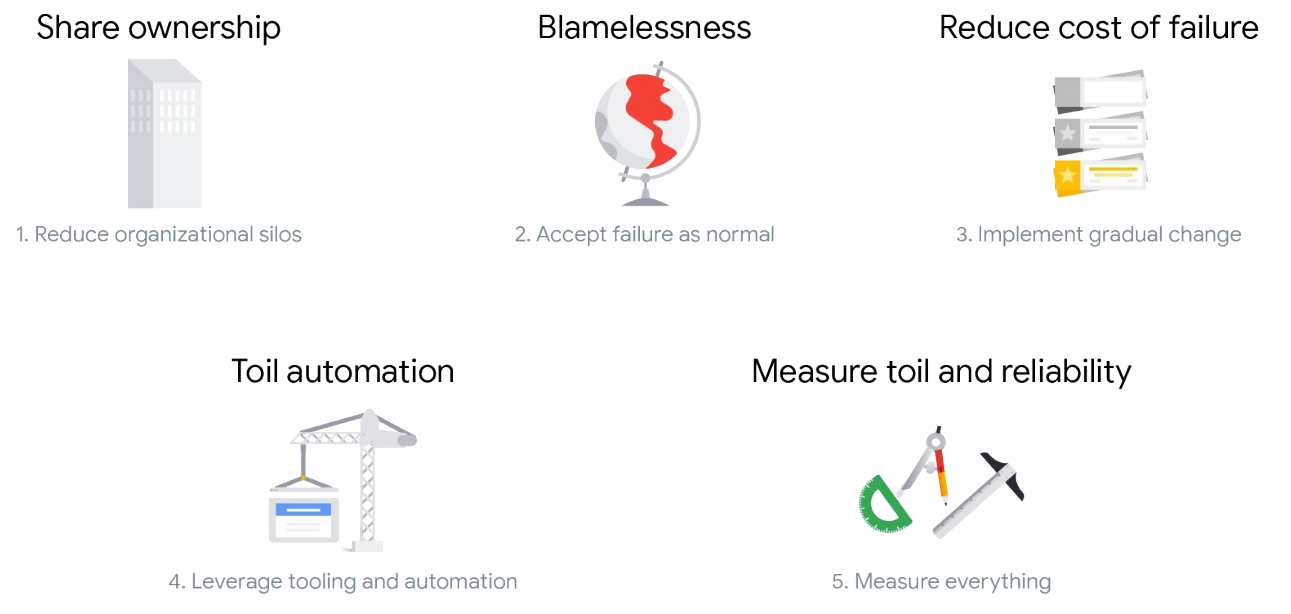

SRE consists of both technical and cultural practices, and they align with the DevOps pillars as follows:

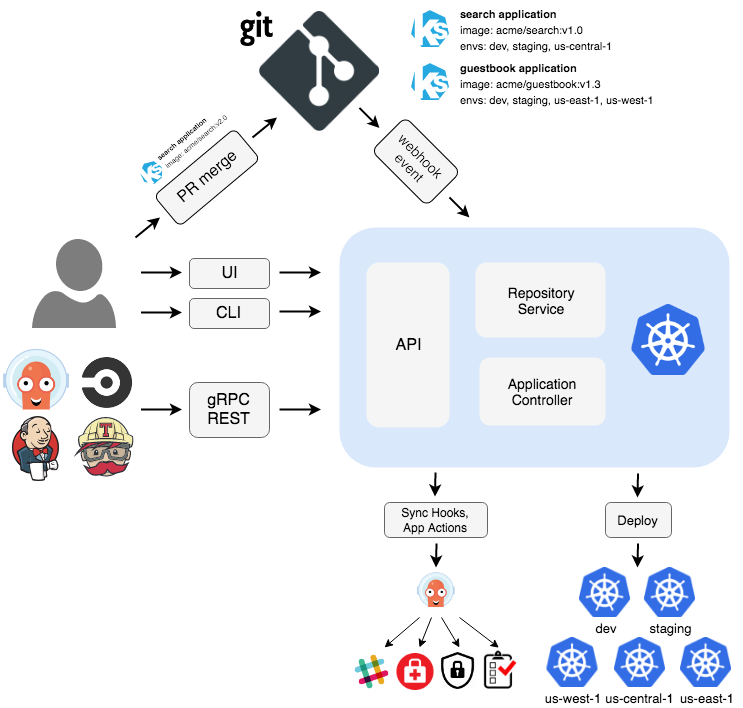

But you may wonder: if we are automating IT, how can we also automate our Kubernetes deployments while we build our portfolio code? The answer is GitOps: the desired state of our cluster lives in a Git repository as the single source of truth, and Kubernetes controllers continuously sync the cluster to that state in an automated way. Popular GitOps choices are ArgoCD and FluxCD. Both are Cloud Native Computing Foundation (CNCF) projects that integrate with Kubernetes and provide continuous delivery for it. So let's see how to sync applications from Git to Kubernetes deployments using ArgoCD.

Before installing ArgoCD, we must create a Git repository (it can be private), ideally with the following structure:

```
.
├── apps.yaml
├── argo-cd
├── argo-events
├── argo-rollouts
├── argo-workflows
├── production
├── projects.yaml
├── sealed-secrets
└── staging
```

The repo tree contains four argo-* folders, one per Argo project. ArgoCD is in charge of continuous delivery. Argo Workflows lets us orchestrate pipelines with custom logic, such as running ETL jobs, or building and deploying an application when a commit is synced. Argo Events lets us build event-driven workflows, for instance notifying a Slack channel with our app's metrics when an event occurs. Argo Rollouts lets us promote and roll back deployments automatically based on automated analysis, run canary deployments, etc.

It is also a good idea to use Git submodules, so we can fine-tune our deployments on top of the upstream project repositories. This can be done by issuing:

```shell
git submodule add git@github.com:argoproj/argo-cd.git argo-cd/base/argo-cd
```

This way we can open argo-cd/base/argo-cd, fetch a tag from upstream, and work on top of that release version.
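One way to consume the submodule, sketched here under the assumption that the overlays reference it as `../../base` (as in the kustomization shown further down), is a small argo-cd/base/kustomization.yaml that points kustomize at the upstream install manifest of the checked-out release. This mirrors the kustomize-based install described in the ArgoCD docs, but with a local path instead of a remote URL; the `argocd` namespace is assumed to exist already (e.g. created with `kubectl create namespace argocd`).

```yaml
# argo-cd/base/kustomization.yaml (sketch)
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
# Put every upstream resource into the argocd namespace
namespace: argocd
resources:
  # Upstream manifest from the submodule, pinned to whatever tag is checked out
  - argo-cd/manifests/install.yaml
```

With this layout, upgrading ArgoCD becomes a matter of checking out a newer tag inside the submodule and committing the updated submodule pointer.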

Working on top of a release means customizing it for our own purposes, which in Kubernetes is widely done with kustomize. If a directory contains a kustomization.yaml (or kustomization.yml) file, you can apply it by running:

```shell
kubectl apply -k path/to/kustomization-dir
```

A kustomization.yaml usually includes the following:

```yaml
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
resources:
  - ../../base
  - ingress-traefik.yaml
# Deprecated in kustomize v5 in favor of `patches`, but still supported
patchesStrategicMerge:
  - argocd-rbac-cm.yaml
  - argocd-server-deployment-patch.yaml
```

Here all base resources are merged with the new customizations; the resource names inside the patches must match resources that are already loaded. You can also preview the changes before applying the kustomization by running:

```shell
kubectl diff -k path/to/kustomization-dir
```

Another way to customize deployments is to set values on a Helm chart. Helm charts are also quite popular, as they usually come with good documentation on customization and an easy setup, with a minimal configuration that is ready to deploy by running:

```shell
# A release name (here my-release) is required unless --generate-name is used
helm install my-release . -n namespace --values=values.yaml --set myvalue=value
```

You can also check all available configuration values of a Helm chart by running:

```shell
helm show values .
```

Or render the chart's default setup into the full manifest of the deployment and tune it to your needs, e.g.:

```shell
helm template mydeployname . | tee deployment.yaml
```

Last but not least, GitHub Pages can act as a Helm repository for your chart artifacts.
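To make the interplay between `--values` and `--set` in the helm install command concrete, here is a hypothetical values.yaml. The keys are invented for illustration, since every chart defines its own (list them with `helm show values .`).

```yaml
# values.yaml (hypothetical keys, for illustration only)
myvalue: default-value   # replaced at install time by --set myvalue=value
server:
  replicas: 1            # nested keys are addressed with dots, e.g. --set server.replicas=2
```

Helm starts from the chart's default values, merges values.yaml on top, and applies `--set` last, so with the install command above the release is rendered with `myvalue: value` while `server.replicas` stays at 1.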

For the sake of simplicity, we'll be using kustomize here.
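As a sketch of what one of the patches referenced in the kustomization above might contain, here is a possible argocd-rbac-cm.yaml. It assumes the upstream argocd-rbac-cm ConfigMap lives in the argocd namespace, and the SSO group `my-org:platform-team` is hypothetical. Note how `kind`, `metadata.name` and `metadata.namespace` match the base resource, so kustomize can merge the patch into it.

```yaml
# argocd-rbac-cm.yaml: strategic-merge patch for the upstream ConfigMap
apiVersion: v1
kind: ConfigMap
metadata:
  name: argocd-rbac-cm   # must match the name of the base resource
  namespace: argocd
data:
  # Role given to authenticated users that no policy below matches
  policy.default: role:readonly
  # Grant the built-in admin role to a (hypothetical) SSO group
  policy.csv: |
    g, my-org:platform-team, role:admin
```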

First, we define our AppProjects in projects.yaml, one for production and another for staging:

```yaml
apiVersion: argoproj.io/v1alpha1
kind: AppProject
metadata:
  name: production
  namespace: argocd
  finalizers:
    - resources-finalizer.argocd.argoproj.io
spec:
  description: Production project
  sourceRepos:
    - '*'
  destinations:
    - namespace: '*'
      server: https://kubernetes.default.svc
  clusterResourceWhitelist:
    - group: '*'
      kind: '*'
  namespaceResourceWhitelist:
    - group: '*'
      kind: '*'
---
apiVersion: argoproj.io/v1alpha1
kind: AppProject
metadata:
  name: staging
  namespace: argocd
  finalizers:
    - resources-finalizer.argocd.argoproj.io
spec:
  description: Staging project
  sourceRepos:
    - '*'
  destinations:
    - namespace: '*'
      server: https://kubernetes.default.svc
  clusterResourceWhitelist:
    - group: '*'
      kind: '*'
  namespaceResourceWhitelist:
    - group: '*'
      kind: '*'
```

Next come our production and staging Applications, defined in apps.yaml, which implement the App of Apps pattern:

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: production
  namespace: argocd
  finalizers:
    - resources-finalizer.argocd.argoproj.io
spec:
  project: production
  source:
    repoURL: your-repo-here
    targetRevision: HEAD
    path: production
  destination:
    server: https://kubernetes.default.svc
    namespace: production
  syncPolicy:
    automated:
      selfHeal: true
      prune: true
---
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: staging
  namespace: argocd
  finalizers:
    - resources-finalizer.argocd.argoproj.io
spec:
  project: staging
  source:
    repoURL: your-repo-here
    targetRevision: HEAD
    path: staging
  destination:
    server: https://kubernetes.default.svc
    namespace: staging
  syncPolicy:
    automated:
      selfHeal: true
      prune: true
    syncOptions:
      - CreateNamespace=true
```

Note the `syncOptions` field: `CreateNamespace=true` makes ArgoCD create the destination namespace if it does not exist yet, and it is only one of the sync options available.
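Besides `CreateNamespace=true`, the sync policy accepts further options. The fragment below shows a few of them as they could be added to either Application; the retry values are arbitrary examples, and the exact set of supported options depends on your ArgoCD version, so check its sync options documentation before relying on them.

```yaml
# Fragment of an Application's spec (goes under spec:)
syncPolicy:
  automated:
    selfHeal: true              # revert manual changes made directly in the cluster
    prune: true                 # delete resources that were removed from Git
  syncOptions:
    - CreateNamespace=true      # create the destination namespace if it is missing
    - PruneLast=true            # prune only after the other resources are synced and healthy
    - ApplyOutOfSyncOnly=true   # skip applying resources that are already in sync
    - ServerSideApply=true      # use Kubernetes server-side apply
  retry:
    limit: 5                    # retry a failed sync up to 5 times
    backoff:
      duration: 5s              # first retry after 5 seconds
      factor: 2                 # double the wait on each attempt
      maxDuration: 3m           # never wait more than 3 minutes between attempts
```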

`targetRevision: HEAD` tracks the latest commit, and `path` points at the folder holding each environment's applications. In our case, the production and staging folders contain many Application manifests, pointing to other repos and to the aforementioned Argo submodule kustomizations (argo-cd, argo-workflows, etc.).

```
production
├── argo-cd.yaml
├── argo-events.yaml
├── argo-rollouts.yaml
├── argo-workflows.yaml
```

As an example, here is argo-cd.yaml:

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: argo-cd
  namespace: argocd
  finalizers:
    - resources-finalizer.argocd.argoproj.io
spec:
  project: production
  source:
    path: argo-cd/overlays/production
    repoURL: your-repo-here
    targetRevision: master
  destination:
    server: https://kubernetes.default.svc
  syncPolicy:
    automated:
      selfHeal: true
      prune: true
```

Now we push our changes to the repo and spin up ArgoCD with:

```shell
kustomize build argo-cd/overlays/production | kubectl apply --filename -
```

To access the UI and manage ArgoCD from the terminal, it is recommended to install the argocd CLI and log in:

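```shell
# Read the auto-generated initial admin password
export PASS=$(kubectl -n argocd get secret argocd-initial-admin-secret --output jsonpath="{.data.password}" | base64 --decode)
# --insecure skips TLS certificate verification; --grpc-web helps when the proxy in front of argocd-server lacks HTTP/2 support
argocd login --insecure --username admin --password "$PASS" --grpc-web argoingress.your.domain
# Replace the initial password with your own
argocd account update-password --current-password "$PASS" --new-password newpassword
```

Finally, to work with private repos, you need to provide ArgoCD with the user and an access token: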

```shell
argocd repo add your-private-repo --username user --password authtoken
```

It is strongly recommended to set up your container registry credentials on a Kubernetes service account. Otherwise you may run into container registry rate limits and be denied access to your private container registry. For ArgoCD we are using the default service account, but in the next episode we will use a different service account for argo-workflows.
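If you prefer to keep these credentials declarative as well, ArgoCD also picks up Secrets labeled `argocd.argoproj.io/secret-type: repository`, and Kubernetes lets a ServiceAccount carry `imagePullSecrets`. The sketch below reuses the placeholders from the command above; the Secret name, the `production` namespace for the ServiceAccount, and the `regcred` registry Secret (which could be created beforehand with `kubectl create secret docker-registry regcred --docker-server=<registry> --docker-username=<user> --docker-password=<token>`) are assumptions. Avoid committing the token in plain text: a tool such as Sealed Secrets (note the sealed-secrets folder in our repo structure) can encrypt it first.

```yaml
# Declarative alternative to `argocd repo add` (placeholder values)
apiVersion: v1
kind: Secret
metadata:
  name: private-repo        # hypothetical name
  namespace: argocd
  labels:
    argocd.argoproj.io/secret-type: repository   # marks this Secret as a repo credential
stringData:
  type: git
  url: your-private-repo
  username: user
  password: authtoken
---
# Let pods using the default ServiceAccount pull from a private registry
apiVersion: v1
kind: ServiceAccount
metadata:
  name: default
  namespace: production     # assumed workload namespace
imagePullSecrets:
  - name: regcred           # hypothetical docker-registry Secret in the same namespace
```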
